What does ChatGPT mean for our education?

Teachers and universities have to come up with a common stance

The chatbot that’s gone viral: ChatGPT. And not without reason: it generates text so quickly and so well that it is sometimes difficult to distinguish from human writing. Could this appeal to students who would rather have the bot write their papers?

You might think it comes in handy, a bot that can put together a book report, essay or paper for you. But is it reliable enough? Apart from the fact that using it this way obviously counts as fraud, it is not always certain that the chatbot is actually right. It is based on machine learning, which means that if it is fed wrong information, it builds on that. It also has no live connection to the internet; instead, it relies on a fixed dataset. And if things get complicated, it may even start hallucinating. More on that below.

Cursor talked to TU/e alumnus Alain Starke, who recently started as an assistant professor of Persuasive Communication at the University of Amsterdam, with a focus on marketing communication in a digital society. Starke is also affiliated with TU/e’s Human-Technology Interaction Group. Needless to say, he has already played around with the bot a bit. “I also follow quite a few people who are involved in this and who show that the bot can help in many areas. As long as you know what to ask, you can get great answers. But asking good questions is not so easy.”

So what’s a good question? “It needs to be specific and there needs to be some context. If it gets too complicated, the bot may start hallucinating and spitting out nonsense.” That is what happened when Starke tested the bot on a scientific topic. “The bot produced a text in which it cited a paper I had never heard of, from a journal that does exist. I was taken aback: had I missed this contribution to my field? But when I looked into it, it turned out the paper didn’t exist. This illustrates that you should never just copy a text produced by the bot. It may well be a bunch of coherent sentences that are completely off base.”

Monitoring more important

GPT stands for Generative Pre-trained Transformer; the “pre-trained” part refers to the bot having been trained in advance on a fixed dataset. Starke thinks the bot has pros and cons. “It might come in handy as extra input if you get stuck during your creative process. Or as a programming assistant. But you have to keep checking the answers yourself. The dangers include intellectual property concerns (who is the author when you use chatbot output?), plagiarism and buying into false information.”

So are you worried students will use it to commit fraud while sitting exams or writing papers? “I’m pretty sure people will try. But I don’t know yet how much of an impact it will have, as it’s difficult to predict students’ behavior in this matter. Yes, these answers are easy to get, but are students willing to take the associated risks? The usefulness also varies per course. But it is a fact that monitoring will become more important and that we need to take another look at improving our exam questions so they actually test a student’s insights.”

It may be a bit easier to use for essays, Starke thinks, “but good apps will most probably be developed to detect language produced by a bot. Chatbots and language models are more predictable in their language use than people. You can see this, for example, in the amount of repetition. Our main task now is to come up with a common stance as teachers, as a university. Can a student use the output of a bot as a starting point for a report, so they only have to tweak it? We’re okay with using Google to get information, and programmers are allowed to look up their answers on Stack Overflow. So where’s the line?” Of course, a clear difference between Google and ChatGPT is that the former shows the source of the information, while the latter doesn’t disclose its sources.

Turing test

ChatGPT, created by OpenAI from San Francisco, generates answers, writes texts and interacts with you in the blink of an eye. But does the bot pass the Turing test? This is an experiment designed by the English mathematician and computer pioneer Alan Turing to test a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Starke: “It depends a bit on whether you mean the original Turing test or the broader definition we use today. I think it does okay on the original test, despite its limitations. It doesn’t swear spontaneously and will respond to some questions by saying it’s a language model, or a bot. So it varies per conversation whether it could be mistaken for a human. It doesn’t communicate entirely freely, as in the Turing test, so strictly speaking the answer would be no.”

Interview with the bot

If you want to test the bot, it’s of course best to start with questions you definitely know the answers to. “That’s how I started,” Starke says. “Sometimes when I asked it a question with three answer elements, it managed to name two of them. When I narrowed down the context, it got the third one as well. That is easy to explain. It’s a kind of probability model. An arbitrary example would be that in its database of information, the word ‘beautiful’ is followed by the word ‘woman’ ten percent of the time. But if you narrow down the context, you can eliminate options and it becomes more of a conditional probability.”

“So by making the question more suggestive, you give the bot more to go on when it makes its selection, which increases the probability of a correct answer. You see the same thing in people: recognizing is easier than remembering. I do a lot of pub quizzes, and if I see a brand logo without the brand name, I’m more likely to recognize it than if you asked me what the Reebok logo looks like.”
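Starke’s point about conditional probability can be illustrated with a toy word counter. The sketch below is a hypothetical illustration, not how ChatGPT works internally: real language models use neural networks over far longer contexts, not simple word counts. The corpus and the function name `next_word_distribution` are made up for this example. It estimates how likely each word is to follow a given context, and shows that adding one more word of context narrows the options.

```python
from collections import Counter

# Hypothetical toy corpus, invented for illustration only.
CORPUS = [
    "what a beautiful day",
    "a beautiful day for a walk",
    "she met a beautiful woman",
    "the beautiful woman waved",
    "a beautiful sunset tonight",
    "what a beautiful view",
]


def next_word_distribution(sentences, context):
    """Estimate P(next word | context) by counting which word follows the context."""
    context = tuple(context.split())
    n = len(context)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Slide over every position where the context is followed by at least one word.
        for i in range(len(words) - n):
            if tuple(words[i:i + n]) == context:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    # Return relative frequencies; an unseen context yields an empty distribution.
    return {word: c / total for word, c in counts.items()} if total else {}


# With one word of context, 'woman' follows 'beautiful' in 2 of 6 cases.
print(next_word_distribution(CORPUS, "beautiful"))
# With a longer context, the options narrow to a single candidate.
print(next_word_distribution(CORPUS, "the beautiful"))
```

The design choice here is deliberately simple: counting exact word sequences makes the effect of extra context visible, which is the intuition Starke describes, even though a real chatbot estimates these probabilities very differently.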

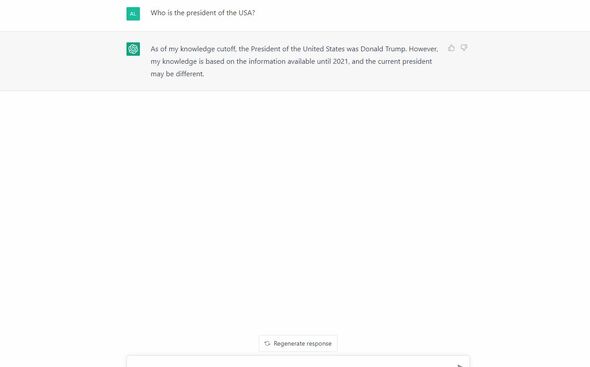

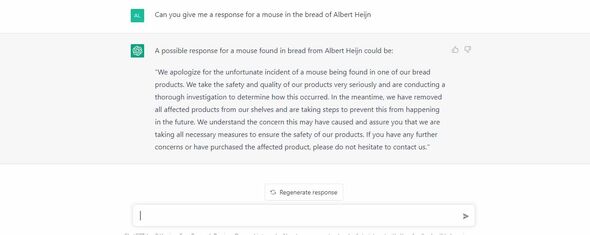

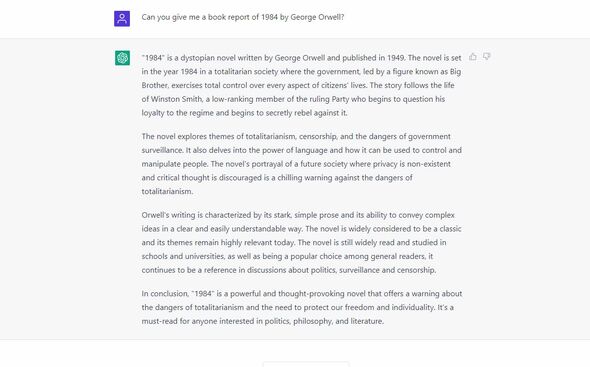

Cursor also asked the bot a few (simple) questions, including a request for a book report on George Orwell’s novel 1984. When Cursor was not satisfied with the short book report below, we gave the command 'longer', and suddenly we got a much longer and more detailed report.

Want to try it yourself? On https://openai.com/blog/chatgpt/ you will find the chatbot and you can question it yourself. You do need to create a free account for this.

How hot is the bot?

Starke is curious how the role of ChatGPT in society will develop. “Will it still be a hot topic three months from now, or will everyone be tired of AI by then? Yes, it seems like everyone’s using it at the moment. But if I’ve learned one thing from innovation science, it’s that it’s very difficult to predict the future.” Cursor will continue to follow this topic and would love to get in touch with researchers who are involved or who would like to give their opinions about the implications for research and teaching.
