International network seeks to open the black box of AI

As part of European staff exchange project REMODEL, mathematicians will attempt to unravel the secrets of machine learning


- 17/01/2024

REMODEL is the name of the ambitious European staff exchange program that recently started. Within this consortium of seven universities, including TU/e, mathematicians from inside and outside Europe are coming together to open the black box of deep learning. This is necessary to gain more insight into exactly how machine learning works and what happens inside artificial neural networks. Cursor spoke with researchers Wil Schilders and Remco Duits who, together with Karen Veroy-Grepl, form the TU/e team that is taking part in the project.

The Marie-Curie staff exchange project REMODEL (Research Exchanges in the Mathematics of Deep Learning with Applications), which was launched in early 2024 for a period of three years, consists of a consortium of seven universities from both within and outside Europe, including TU/e. As part of the exchange program, both permanent and temporary academic staff will spend periods of several months working at another institution within the consortium in order to establish close collaborations. From TU/e, employees - mainly PhD candidates, but also their supervisors - may be sent abroad to a participating university for a total of 27 months.

“Because the EU-funded program aims to promote broad international cooperation, one of the conditions is that employees from European institutions are required to go on exchange to an institution outside the EU,” says Wil Schilders, emeritus professor at TU/e’s Department of Mathematics & Computer Science.

In early 2023, he was personally approached by the Norwegian University of Science and Technology (NTNU), the program’s coordinating university, with the question: ‘Are you in?’ He immediately became enthusiastic and asked his colleagues Karen Veroy-Grepl and Remco Duits if they were on board as well. “Essentially, I’m retired,” he says. Although Schilders was granted emeritus status last June, he remains involved in various projects. For example, he will also take on the role of coordinator for the REMODEL program at TU/e for the initial phase.

The hidden layers of neural networks

The project consists of different work packages, each geared toward specific research areas in the field of machine learning. The researchers involved all have their own areas of expertise, but there is one thing they have in common: they all want to open the black box of AI in order to gain more insight into exactly how machine learning works and what goes on inside artificial neural networks. They aim to accomplish this through mathematical methods.

Machine learning (and the related deep learning) is an important part of artificial intelligence, an area of research that can no longer be ignored. Deep learning is a machine learning method that builds self-learning systems from many layers of artificial neurons, which together form so-called neural networks. These systems learn from large amounts of input data. One application of deep learning is facial recognition: by feeding the network a vast number of pictures, you develop a system capable of recognizing faces. The REMODEL project primarily focuses on using artificial neural networks to speed up simulations.

“The neural networks start off with an input layer,” Schilders explains. “From the input layer, information is sent to the neurons in the first hidden layer. The neurons have a specific activation function, so they process this information and then transmit it to the next hidden layer, where the same exact thing happens. Eventually, the information is received by the neurons in the output layer - which is the network’s outcome.” So the network consists of information input at the beginning and output at the end. “What happens in between is what we call the black box. We don’t know exactly what goes on there,” says Schilders.
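The flow Schilders describes can be sketched in a few lines of Python. This is only a minimal illustration: the tanh activation, the layer sizes and all weights are assumptions made for the example and do not come from the REMODEL project.

```python
import math

def tanh_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the inputs plus a bias,
    then applies its activation function (tanh is an assumed choice here)."""
    return [
        math.tanh(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Send the input through every layer in turn and return the output layer's values."""
    activations = inputs
    for weights, biases in layers:
        activations = tanh_layer(activations, weights, biases)
    return activations

# Hypothetical toy network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]
output = forward([1.0, 2.0], layers)
print(output)
```

Every individual step is simple arithmetic. The "black box" arises because a trained network stacks many such layers with very many weights, so it is hard to say why a particular set of weights produces a particular outcome.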

More math for more insight

“And that is precisely the problem,” he continues. “Successes have been achieved with deep learning, but people often don’t know why it works.” Also, the outcomes are not always reliable, he says. “And if the system doesn’t work, people don’t know the reason for that either.” In order to gain more insight into what happens in neural networks, more mathematics should be involved, he believes. “Therefore, we’re going to work together with a total of seven partners within the REMODEL project to explore how we can use mathematics to contribute to a greater understanding of how artificial neural networks operate. This could allow us to better predict whether a method will work or not and how to design a network, exactly how many hidden layers and how many neurons you need, and so on.”

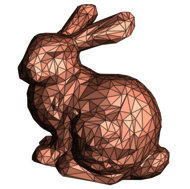

Together with professor Karen Veroy-Grepl, Schilders is part of the work package called “Model order reduction”. He shows a picture depicting three different images of the same rabbit (see image), ranging from a highly detailed image to a simplified model with rigid geometric shapes. “As you can see, you don’t need all those details to recognize that it’s a rabbit; you can omit a large part of the information,” he explains. Using mathematical techniques, you can isolate the dominant properties, making it easier to solve various mathematical problems. “That way, you can still get very good answers without having to solve the entire big problem with all the details, because the dominant properties are enough.”

This method can also be of great use in the area of neural networks. “Neural networks are often very large, so we’re working on how to reduce them with mathematical methods without losing essential information and while ensuring the black box keeps doing what it needs to do. The trick is not to get rid of too much information, but just enough to be able to calculate more efficiently while getting roughly the same results,” says Schilders.
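The idea of keeping only the dominant properties can be illustrated with a small numerical sketch. The example below is a generic illustration, not the method used in the REMODEL work package: it uses power iteration to find the single strongest pattern in a small table of numbers and rebuilds the table from that pattern alone. The data and the function names are made up for the example.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def transpose(matrix):
    return [list(column) for column in zip(*matrix)]

def dominant_mode(matrix, iterations=100):
    """Power iteration on A^T A: returns (sigma, u, v), the largest singular
    value of the matrix and its left and right singular vectors."""
    matrix_t = transpose(matrix)
    v = [1.0] * len(matrix[0])
    for _ in range(iterations):
        w = matvec(matrix_t, matvec(matrix, v))
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    av = matvec(matrix, v)
    sigma = math.sqrt(sum(c * c for c in av))
    u = [c / sigma for c in av]
    return sigma, u, v

# Hypothetical data: one dominant pattern plus a small perturbation ("details").
pattern_rows = [1.0, 2.0, 3.0]
pattern_cols = [1.0, 0.5, 0.25, 2.0]
full = [
    [r * c + 0.01 * (-1) ** (i + j) for j, c in enumerate(pattern_cols)]
    for i, r in enumerate(pattern_rows)
]

sigma, u, v = dominant_mode(full)

# Reduced model: rebuild every entry from the dominant mode only.
reduced = [[sigma * ui * vj for vj in v] for ui in u]
max_error = max(abs(full[i][j] - reduced[i][j]) for i in range(3) for j in range(4))
print(max_error)
```

The reduced description stores one number and two short vectors instead of the full table, yet reproduces every entry to within the size of the small perturbation. Real model order reduction works with far larger systems and keeps more than one mode, but the trade-off is the same: discard detail, keep what dominates.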

Real intelligence

To make neural networks more efficient, we need to build more knowledge into them, Schilders believes. Because pure data is not enough. “If you want to train neural networks to predict the behavior of planetary systems, for example, you could measure the orbits for a certain number of years and feed all that data into the network,” he explains. “But if you then ask the system to make a prediction for the years to come, it fails miserably.”

So how do we make it work? “You have to incorporate the laws of physics into the networks, such as gravity and other physical properties,” he claims. “If something falls down, you can choose to make lots of measurements of that and feed all those figures into a network. But Newton formulated the law of gravity for that purpose. It’s much better to incorporate that simple law of physics, which applies in all cases, into a neural network than to feed thousands of numbers into it. It’s much smarter, more efficient and yields better results.” Neural networks that include knowledge of the underlying physics, rather than just data, are also known as physics-informed neural networks. According to Schilders, this is the way to make neural networks better and more reliable. “You shouldn’t rely on data alone. We have all this knowledge, all these laws, so why not use them?”
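The falling-object example can be turned into a toy comparison. The sketch below is not a physics-informed neural network; it is a much simpler analogue, under stated assumptions, that contrasts a model fitted to data alone (a straight line) with a model whose form is fixed by the known law s = ½·g·t², where only g is estimated. The measurements are synthetic and noise-free, and all names are hypothetical.

```python
G_TRUE = 9.81  # m/s^2, used only to generate the synthetic "measurements"

# Synthetic measurements of a falling object during the first second.
times = [0.1 * k for k in range(1, 11)]
drops = [0.5 * G_TRUE * t * t for t in times]

def fit_line(xs, ys):
    """Data-only model: ordinary least-squares straight line y = slope*x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x

def fit_gravity(xs, ys):
    """Law-based model: least-squares estimate of g in s = 0.5 * g * t^2."""
    basis = [0.5 * t * t for t in xs]
    return sum(b * y for b, y in zip(basis, ys)) / sum(b * b for b in basis)

slope, intercept = fit_line(times, drops)
g_fit = fit_gravity(times, drops)

# Predict well outside the measured range.
t_future = 3.0
true_drop = 0.5 * G_TRUE * t_future ** 2
line_pred = slope * t_future + intercept
law_pred = 0.5 * g_fit * t_future ** 2
print(true_drop, line_pred, law_pred)
```

Both models describe the measured first second reasonably well, but only the one with the law built in extrapolates correctly to three seconds. A physics-informed neural network applies the same principle to a network, typically by adding the physical equations to what the network is trained to satisfy.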

ChatGPT is probably the best-known example of a large neural network with a great many hidden layers and millions of neurons per layer. “It contains data from the entire Internet, and yet the system is not all that reliable. This is because you need human knowledge to improve neural networks.” Schilders cites his now famous one-liner that he included in his valedictory speech and that he often uses in his lectures: “Real intelligence is needed to make artificial intelligence work.”

Teaching computers to see better

Remco Duits, TU/e researcher at the Department of Mathematics & Computer Science, will be working on work package 6 within REMODEL, which focuses on solving image analysis problems such as automatic identification of complex vascular systems in medical images. This ties in with his project “Geometric Learning for Image Analysis,” for which he received a 1.5-million-euro Vici grant in 2021.

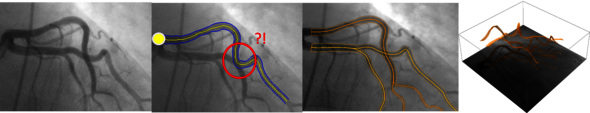

Many medical and industrial images are assessed by computers, but current methods for automatic image analysis fall short, says Duits. There are problems with identifying and tracking elongated structures, for example. In medical images, these might be blood vessels and fibers; in industrial images, they could be cracks in steel or overlapping components in images of computer chips. “These images often contain noise, which complicates interpretation,” he explains. “On top of that, elongated structures are often complex and overlap in the images. This makes it difficult for computers to determine, for example, when two blood vessels cross and when they branch.”

Wrong turn

To illustrate, he shows an X-ray image of coronary arteries (see image). In the red circle, you can see several blood vessels overlapping. “With the naked eye you can see where the blood vessels cross, but the AI can interpret it as the blood vessel taking a turn at that point.” Correct segmentation and tracking of blood vessels in medical images is important, for example in early diagnosis of diabetes. And when a physician needs to insert a catheter into a patient, they also have to be able to properly navigate it through the veins using medical images. A misinterpretation of the image, often at an intersection, can cause the tracker to take a wrong turn. It is therefore essential to develop a method that tracks blood vessels automatically and correctly, without being thrown off by intersections or noise.

Duits is working on developing reliable AI methods for image analysis that can prevent interpretation errors. “With deep learning, a model can be trained on data to identify blood vessels. But this requires a lot of high-quality data, which is not always at hand, and processing this data takes a lot of computing power,” he explains. Like Schilders, Duits is convinced that mathematics is needed to understand exactly what happens in neural networks. And once you understand how it works, you can use mathematical methods to design better neural networks that are also more broadly applicable in both medical and industrial image analysis.

Copycat

“What we may not always realize is that we humans are incredibly good at interpreting images,” says Duits. “If you look at me, you instantly see my face, where my shirt is and where the table starts. That’s because we have smart little filters in our visual system.” He picks up a pen from the table and spins it in the air. “Look, when I rotate the pen, you still recognize it as a pen. To us, that’s obvious, but neural networks still have to learn that. You could teach them by feeding lots of data into the network, but that’s a tedious and laborious process. Instead, you can use mathematical methods to design a network in such a way that this information is intrinsically embedded in it. That way, you don’t have to train the network, so you don’t need all that training data.”
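The pen example can be mimicked with a small sketch of a description that is unaffected by rotation by construction, so nothing has to be learned from rotated examples. This is a generic illustration, not the PDE-based networks Duits works on; the point set standing in for the pen and the function names are assumptions.

```python
import math
from itertools import combinations

def rotate(points, angle):
    """Rotate 2D points around the origin by the given angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in points]

def invariant_descriptor(points):
    """Sorted distances between all pairs of points. Rotation preserves
    distances, so this description is the same for every orientation."""
    return sorted(math.dist(p, q) for p, q in combinations(points, 2))

# Hypothetical "pen": a few points along a thin, slightly bent shape.
pen = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.2)]
spun = rotate(pen, math.radians(73))

same = all(
    math.isclose(a, b, abs_tol=1e-9)
    for a, b in zip(invariant_descriptor(pen), invariant_descriptor(spun))
)
print(same)
```

The raw coordinates of the spun pen differ from the original, but the descriptor does not. A system that works with such a description needs no training data to learn that a rotated pen is still a pen.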

How people look at images - so-called neurogeometry - is expressed mathematically by means of PDEs (partial differential equations). “With PDEs you can make neural networks more efficient, so you need less training data while still getting better results,” he says. In order to develop better analysis methods, Duits adds this geometric information to the neural networks. “We’re essentially just playing copycat. We copy off of our own visual system to teach computers to see better. We do that by training purely geometric meaningful association fields (instead of ad hoc activations).”

Like Schilders, he is convinced that you need to incorporate physics expressed in mathematical models into the design of your network. “That’s how you build actual intelligence into it. More and more we come to realize that it’s the smart thing to do. It’s a fundamentally different approach and it results in networks that work very differently.”

A step further

“Our main goal in the REMODEL project is to bring together all mathematically oriented machine learners from both inside and outside of Europe to engage in meaningful interactions so they can join forces,” he says. “That way, we can not only get a better understanding of neural networks, but also reduce them, improve them and make them more efficient,” he argues. “A revolution is happening in the field of AI and so many mathematicians are working on it. That’s why it’s very important to stay connected. We all benefit from encouraging and advancing each other.” According to him, REMODEL also gives young researchers the opportunity to expand their network beyond Europe. “For example, TU/e PhD candidates Nicky van den Berg and Gijs Bellaard will be going on a collaboration visit to Emory USA,” says Duits.

He hopes that the REMODEL program will yield important insights about machine learning that will take us a step further. “You can get excellent results with neural networks, but until you understand how they actually work, you’re not working on what really matters. We need to get a better understanding of what happens, and the universal language of mathematics is the perfect tool to share and spread that knowledge.”
