Defending the reliability of science

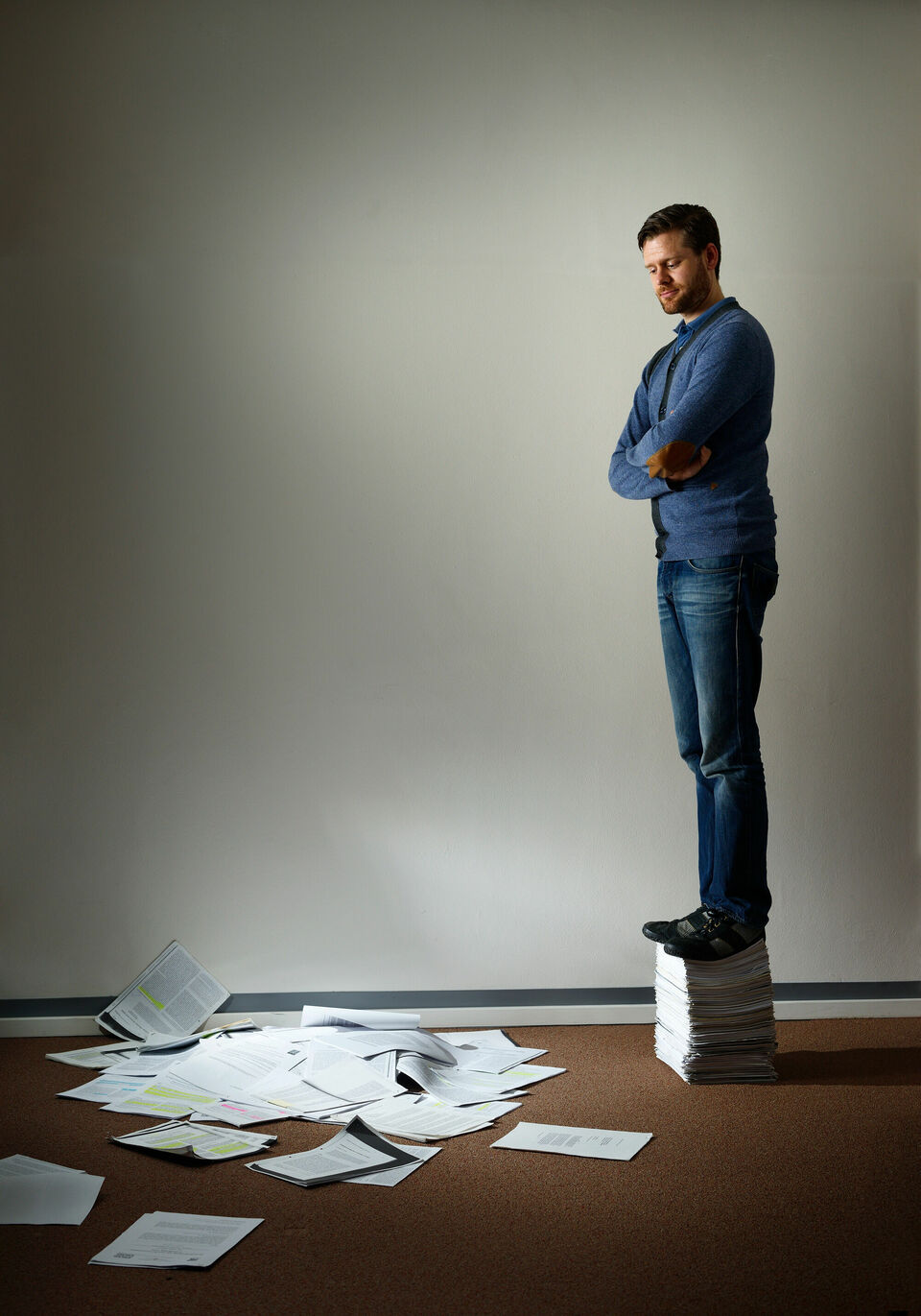

Study something once and it doesn’t count, study something twice and you’re halfway there, study something thrice and you’ve got yourself actual scientific research (if you’re lucky). That is the importance of repetition in science, as worded by Dr. Daniël Lakens. The psychologist from the Department of Human-Technology Interaction wants to establish a new make-up of science with more room for replication research.

The adage of today’s research is ‘publish or perish’. Grants and appointments largely depend on the number of articles candidates have published. Without a proper resume including numerous publications it’s hard to get a foot in the door with financers like NWO. And since scientific journals are really only interested in publishing innovative studies, researchers think twice before exactly replicating their colleagues’ work. They should do it anyway, because replication is the one way to determine the reliability of research, in psychology and medicine specifically. One could even argue that reproducibility is one of the defining characteristics of science.

The realization that the emphasis on trailblazing research, among financers as well, could be detrimental to the robustness of scientific ideas has hardly registered outside scientific circles, Daniël Lakens concludes. “Policymakers have this ideal image of scientists who aren’t burdened by their mortgages and are solely driven by scientific motives. That image may apply to a few extraordinary scientists, but the bulk of researchers consider publication their top priority. There’s just no way around the perverse incentives from scientific financers and publishers. Everything should be innovative and published quickly.” And this tendency might very well come at the expense of science.

Last February, the TU/e psychologist visited research financer NWO, which had invited him following a plea for replication research that he and two colleagues had published in professional journal De Psycholoog. “We were asked to write a short article for NWO’s own magazine, in which we compared replication research with ‘Brussels-sprouts science’: very healthy for science, but not very tasty. As long as Brussels-sprouts science isn’t encouraged and financed, nobody will give it a go. NWO responded to the article by saying they didn’t mind financing replications, as long as they were innovative! In a fit of annoyance, I sent them an e-mail.” As a quite unexpected result, he was invited to the board.

Lakens explained to the NWO board that with their focus on innovation they are neglecting part of their job. “By law, organizations like NWO have two tasks. Not only are they supposed to promote innovative research, but also to guard the quality of science. And those two tasks are currently hindering each other. I therefore proposed to start awarding smaller grants of up to five thousand euros meant especially for replication research. These studies are really not that expensive most of the time.”

Awaiting NWO’s answer, the psychologist is working on an international alternative. “My US colleague Brian Nosek has founded the Center for Open Science, an NPO for the realization of replication research, among other things. I’m working on a special issue of Social Psychology with Nosek currently, for which researchers are invited to submit a plan for replication research. If it’s approved, they’ll receive financing for their project and a publication guarantee.”

The setup for the special issue seems to work. “We’ve already received more submissions than we’ll be able to publish. It proves you don’t have to move mountains to persuade scientists to do this type of research. All it takes is a minor restructuring of the scientific process. On top of that it serves as a type of precautionary measure, since researchers will come to realize their possibly shoddy research could be repeated just like that. Some colleagues are seriously opposed to this type of large-scale replication project. They fear it will only lead to bad publicity.”

The Social Psychology special issue Lakens is working on is part of a larger movement that has surfaced over the past years. For example, the Center for Open Science is running a major Reproducibility Project within which many more results from psychology are being replicated as accurately as possible. A digital platform dubbed the Open Science Framework has been set up for researchers to share their data, announce plans for experiments, and establish collaborations more easily. The renewed enthusiasm for ‘tedious’ replication can be ascribed to two recent issues in his field, according to TU/e’s Lakens. “There’s Diederik Stapel’s fraud, of course. An entirely different issue, concerning Daryl Bem, may have had even more impact, however (see box). The problem with the latter was probably the use of incorrect statistical methods.”

In his crusade against perverse incentives in science, Lakens wrote a critical article on the Dutch ‘top-sector’ policy for newspaper NRC Handelsblad. According to the author, that policy gives too much control to the industrial partners that scientists are forced to work with. Why is the young assistant professor so worried while many of his colleagues just shrug and continue to add to their list of publications?

“I’m not the conformist type. And as soon as you’ve realized what’s wrong, there’s no denying it anymore. Like Thomas Kuhn said: once your eyes are opened… Anyway, I’m in a good position to criticize the system. In my area of expertise it’s relatively easy to prove effects with only a limited number of test subjects, so I publish quite a lot.”

Because Lakens isn’t as pressured by the need to publish as many of his peers are, he’s less susceptible to claims his criticism stems from self-interest only. “On the other hand I see excellent colleagues, those who go the extra mile by selecting extra subjects and replicating their own experiments, who have to worry whether or not their contracts will be renewed. Considering I’d like to be working with nice, expert colleagues for the next thirty years, I guess you could say my attitude may partly be attributed to self-interest.”

Daryl Bem and the future

Daryl Bem is a renowned psychologist who has taught at Harvard and Stanford, among other institutions. In 2011 he published an article in the Journal of Personality and Social Psychology titled ‘Feeling the Future: Experimental Evidence for Anomalous Retroactive Influences on Cognition and Affect’, in which he claimed to have proven in no fewer than nine studies that it’s possible to foresee the future: participants responded differently before being shown erotic images than before being shown neutral pictures. This supposed reversal of cause and effect, apparently statistically significant, caused quite a fuss. Assuming the effect doesn’t exist and the researcher acted in good faith, the statistics must have been incorrect. “As a result of that issue, many colleagues decided it was time to improve our statistical methods collectively. That insight has led to a wave of innovation.”

Lies, big lies, and statistics

If a certain scientific result (‘milk is good for your health’, for example) is published in a respected peer-reviewed periodical, it doesn’t mean milk is in fact healthy. There’s always a statistical possibility that the finding is a fluke and that milk isn’t healthy at all, and possibly even bad for you. By convention a result counts as significant when the p-value is below five percent, meaning that data this striking would turn up less than five percent of the time if there were no effect at all. Scientists are aware of this.

What they’re not as keenly aware of, says Lakens, is that the statistical ‘power’ of the average psychological study is a mere fifty percent. “It means that should there be a real effect, the chances of detecting this effect are the same as correctly guessing heads or tails when flipping a coin.” The majority of the fifty percent that doesn’t yield any significant results ends up in a desk drawer. “And that’s one of the reasons the ratio between false effects and reliable effects in literature is worse than we’d want it to be.”
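For readers who like to see the arithmetic, the fifty-percent figure and its effect on the literature can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a calculation from Lakens: the effect size (0.5 standard deviations), the group size (32 per group) and the share of tested hypotheses that are actually true are made-up inputs, the function names are hypothetical, and the power formula is the normal approximation for a simple two-group comparison.

```python
from statistics import NormalDist


def power_two_groups(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison.

    Uses the normal (z-test) approximation. effect_size is the true
    difference between the groups in standard deviations (assumed input).
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)              # cut-off for significance
    shift = effect_size * (n_per_group / 2) ** 0.5  # expected test statistic
    # Chance that the test statistic lands beyond either cut-off.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


def share_of_true_findings(power, prior_true, alpha=0.05):
    """Fraction of significant results that reflect a real effect.

    prior_true is the assumed share of tested hypotheses that are true;
    it is an illustrative input, not a measured quantity.
    """
    true_positives = power * prior_true
    false_positives = alpha * (1 - prior_true)
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    power = power_two_groups(effect_size=0.5, n_per_group=32)
    print(f"power: {power:.2f}")
    for prior in (0.5, 0.1):
        share = share_of_true_findings(power, prior)
        print(f"prior {prior:.0%}: share of true findings {share:.2f}")
```

With these inputs the power comes out close to one half, the coin flip from the quote. The second function shows why that matters: when only significant results are published, the lower the power and the rarer true hypotheses are, the larger the share of published effects that are false.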

The above problem doesn’t occur in psychology exclusively. Research indicates that the chances of successfully replicating medical research are lower still. The possible implications are not hard to imagine.
