Debating the dark side of AI

Autonomous weapons causing casualties in Ukraine, or the childcare benefits scandal in our own country: we don’t have to look far to find the dark side of artificial intelligence. Together with EAISI, the Center for Humans and Technology invited cyberpunk author Bruce Sterling and Internet pioneer and philosopher Marleen Stikker, among others, to discuss how we can bring AI ‘to the light’. And this discussion topic called for a futuristic location: the Evoluon.

Cyberpunk is a dystopian science fiction genre in which computers – robots, cyborgs and other artificial intelligence – have taken control. As one of the pioneers of cyberpunk, Texan author Bruce Sterling was uniquely qualified to speak at the event called “AI FOR ALL – From the dark side to the light” that took place this Friday afternoon, November 25.

Sterling is impressed with the “flying saucer” – the Evoluon – where he is received, and in turn, moderator Koert van Mensvoort (pictured left in the photo above) is thrilled to see Sterling live. After all, he is one of the ‘future thinkers’ featured in the portrait gallery of the RetroFuture exhibition, which will be on display at that same Evoluon until March. TU/e fellow Van Mensvoort is director of Next Nature, the organization behind the exhibition.

Glass shards

‘Generally speaking, the stupider artificial intelligence is, the better I like it!’ is a quote by Sterling, and he is eager to show the audience – both in the room and via livestream – why that is. He does so using images he created by giving instructions to a text-to-image generator.

As it turns out, the generator struggles with even the simplest of instructions: a checkerboard full of errors, a labyrinth without an entrance. And generating an image of a hand proves to be an entirely impossible task: four fingers, anatomically impossible positions. Why is that?

“Because we lack the words to describe the many ways we can position our hands. What you see is that the generator doesn’t draw; it generates. This is nothing more than automated statistics: images composed of probabilities that result from data files, pixel by pixel.”

So, the AI just repeats the same trick over and over again. Moreover, it lacks a lot of aspects of human intelligence, Sterling shows: he had the generator create an image of a little boy happily wolfing down his breakfast of glass shards. Neither common sense nor ethical considerations prevented the generator from complying with his instructions to the letter.

Gloomy

Marleen Stikker is one of the founders of Waag, an organization that explores the intersections of technology and society. She wrote the book Het internet is stuk – maar we kunnen het repareren [The Internet is broken – but we can fix it] (2019).

Last Friday at the Evoluon, she was the reluctant bearer of gloomy news, because AI is broken as well, and it is in the hands of companies run by billionaires who are much more interested in our personal data than in our well-being.

That is indeed a “gloomy” realization, and as several speakers point out, the same word perfectly captures the atmosphere of the AI-generated images from Sterling’s presentation: all of them are characterized by an alienating otherness.

So we are faced with a problem. AI is supposed to save us, after all: data analytics, machine learning, deep learning – they are all magic words of progress. Yet there is also something structurally wrong with this promised land of AI.

Privilege

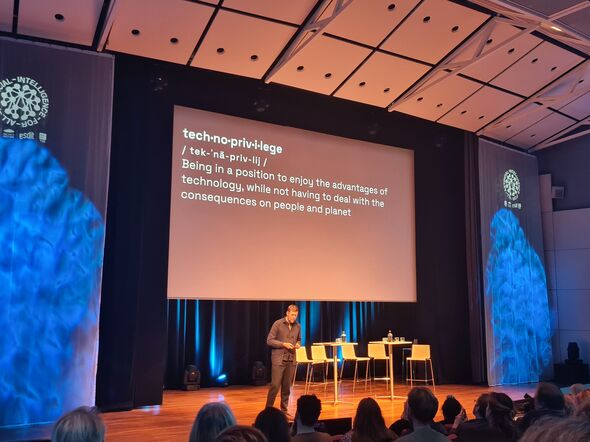

Hendrik-Jan Grievink, designer at Next Nature, has also observed, to his discomfort, how technological progress excludes groups of people and forces them – further – into the periphery. Think of unregistered refugees or digitally illiterate elderly people. An anecdotal example: “Since I always pay with my smartphone, I never carry any cash on me to buy a street newspaper.”

Grievink coined the term ‘technoprivilege’ and designed a measuring instrument similar to Joris Luyendijk’s seven check marks. He used this to collect data during DDW, something he eventually plans to do worldwide, in order to identify in what ways people benefit or suffer from technological progress. Curious about your own technoprivilege? Check it here.

Mandatory label

To bring the event to a close, the three speakers enter into a discussion with TU/e scientists Panos Markopoulos and Kristina Andersen (third and second from right in the photo above), both of whom work at Industrial Design and EAISI. How do they think we can make AI ‘for all’? Markopoulos, professor of Design for behavior change, believes science has a role to play in making technology accessible to more people.

Associate professor Andersen argues against the crippling fear of AI as an intangible force: AI itself is and will remain a tool; any danger lies in the intentions of the people who operate it. She suggests several measures that could reduce inconveniences surrounding AI in everyday life: “A kind of spam filter or adblocker, but specifically designed for AI-generated junk. Or a mandatory label, so that you’ll always know when you’re dealing with a product created by AI.”

Data commons

Meanwhile, Marleen Stikker feels compelled to reiterate the seriousness of the matter. “When things go wrong, our reflex is: regulate.” The EU has been doing that, for example, by means of the new Digital Markets Act. But is that enough in this case?

“There is a growing political movement advocating for data commons: a system in which public values determine how our data are protected – instead of neoliberal, market-driven parties. In order to change how technology develops within our society, we need to rethink our economic model.”

In his concluding remarks, TU/e professor Wijnand IJsselsteijn, scientific director of the Center for Humans and Technology, expresses his thanks for that activist perspective: “We don’t have to go along with the rhetoric that says ‘it’s something that simply happens to us’. We can influence the direction in which AI will develop – and we can impart that view to our students as well.”
