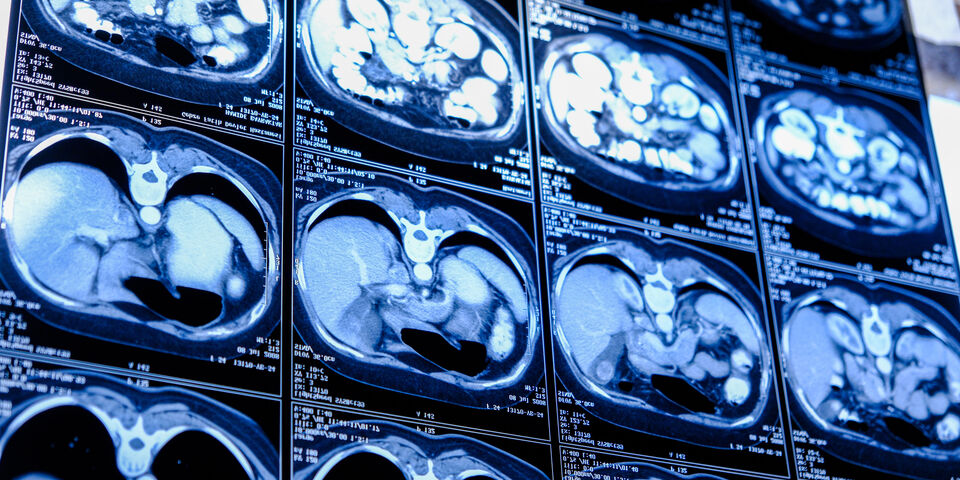

AI can spot patterns in CT scans thanks to supercomputer

Researchers have developed an AI model to analyze CT scans using a new TU/e supercomputer. The technology could form the basis for future applications in cancer detection. Thanks to the immense computing power, the researchers were able to train the model with a quarter of a million images.

The project involves a foundation model: a model that serves as a base for other applications. By training on 250,000 CT scans, some of them accompanied by the corresponding radiology reports, the model learns to recognize patterns within those images. “It has no idea what it’s looking at,” explains the model’s creator, Fons van der Sommen. “It doesn’t yet recognize that something is an organ or abnormal tissue, but it does see that certain parts of an image belong together. You should think of the model as a stem cell: it has the potential to become anything, but by itself, it’s nothing yet.”

“The model forms the foundation on which you can build to make it suitable for specific applications,” says Van der Sommen, who conducts the research as an associate professor in the Department of Electrical Engineering, together with two PhD candidates. The intended applications include detecting various types of cancer, such as lung and ovarian cancer.

Never had this before

According to Van der Sommen, developing a foundation model is not easy—it requires extensive technical expertise, powerful hardware, and large datasets. Until recently, it wasn’t even possible to create such a model at TU/e. That changed with the arrival of the new supercomputer SPIKE-1, says Wim Nuijten, scientific director of the Eindhoven Artificial Intelligence Systems Institute (EAISI), who took the initiative for acquiring the computer. TU/e leases it from the American company NVIDIA (see text box).

“The computer doesn’t compare to U.S. data centers with hundreds of thousands of graphics processing units (GPUs), but ours, though equipped with only 32 GPUs, uses much newer technology. And unlike those data centers, where computing power is shared among millions, we have full control over SPIKE-1. That means we now have computing power we’ve never had before,” says Nuijten.

That’s confirmed by PhD candidate Cris Claessens, who works on the project. He estimates that SPIKE-1’s computing power is roughly a hundred times greater than that of the supercomputer previously used at TU/e.

SPIKE-1

The university has entered into a life cycle management (LCM) contract with NVIDIA, an American producer of computer hardware. This means the computer is not depreciated over several years but renewed every two years, ensuring it always runs on the latest technology. SPIKE-1 is not physically located on campus but in a data center in Kajaani, Finland. Researchers in the Netherlands can access it remotely. TU/e is one of the first institutions to use this system from NVIDIA.

According to Van der Sommen, the most important feature of SPIKE-1 is that all GPUs are interconnected, allowing them to work together. This enables the AI model to process large batches of data simultaneously. “The larger the batches, the better the model’s performance,” he explains. “If you feed the computer chunks of information that are too small, the learning process becomes chaotic and less effective. Without this supercomputer, we could never have built this model. It wouldn’t have learned efficiently enough, and it wouldn’t have ‘seen’ enough data at once.”
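One common explanation for why very small batches make learning “chaotic” is that each training step is based on an average over the batch, and an average over few samples is noisy. The toy sketch below illustrates only that statistical effect. It is not the researchers’ training code: the function name `batch_estimate_spread`, the noise model, and the batch sizes are all assumptions chosen for illustration.

```python
import random
import statistics


def batch_estimate_spread(batch_size, n_batches=400, seed=0):
    """Measure how much a batch average fluctuates from batch to batch.

    Each sample is a noisy measurement of the same underlying value (1.0),
    standing in for a per-image training signal. Hypothetical toy model.
    """
    rng = random.Random(seed)
    estimates = [
        statistics.fmean(rng.gauss(1.0, 1.0) for _ in range(batch_size))
        for _ in range(n_batches)
    ]
    # Standard deviation of the batch averages: lower means steadier steps.
    return statistics.stdev(estimates)


for size in (4, 32, 256):
    print(size, round(batch_estimate_spread(size), 3))
```

In this toy setting the spread shrinks roughly with the square root of the batch size, which is one reason interconnected GPUs that can jointly hold a large batch are attractive.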

For the model’s development, the researchers used a method called self-supervised learning. “In that approach, you don’t label each image to indicate whether it shows cancer or not, as is usually done. Instead, the system learns on its own what a typical CT scan looks like by comparing all images to one another,” says Van der Sommen.
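The article does not say which self-supervised method the team used. As a minimal sketch of one widely used family, contrastive learning, the code below scores how well two views of the same scan are matched against views of other scans in the batch, without any cancer labels. The function names, the tiny two-dimensional “embeddings”, and the temperature value are illustrative assumptions, not details from the project.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(view_a, view_b, temperature=0.1):
    """InfoNCE-style loss: item i in view_a should match item i in view_b.

    The only 'label' is which two views came from the same scan.
    """
    losses = []
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        top = max(logits)  # subtract the max for numerical stability
        log_sum = top + math.log(sum(math.exp(x - top) for x in logits))
        losses.append(log_sum - logits[i])
    return sum(losses) / len(losses)


# Hypothetical embeddings of two scans, each seen through two augmented views.
scans_view_a = [[1.0, 0.0], [0.0, 1.0]]
scans_view_b = [[0.9, 0.1], [0.1, 0.9]]
print(contrastive_loss(scans_view_a, scans_view_b))
```

The loss is low when matching views are close and other scans are far apart, so minimizing it pushes the model to learn what distinguishes one scan from another.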

Cutting-edge technology

It might sound simple: take a powerful computer, feed it a massive dataset, and wait for results. In reality, it’s more complicated. SPIKE-1 is a hypermodern computer—so new, in fact, that not all of its features are yet supported by software, says Claessens.

“With this computer, it’s technically possible to load data directly into the GPUs instead of first going through the CPU,” he explains. “NVIDIA engineers know the hardware can do it, but there’s no software yet to make it happen. They often leave that kind of development to the community.”

To get all the software running, Claessens says, you need to upgrade everything to a state-of-the-art level, because older software simply doesn’t account for this capacity. “It’s true pioneering work with such a new computer,” adds Nuijten. “Thanks to the technical knowledge within our group, we managed to make it work. Not everyone can handle a system like this. The expertise we gain is shared across TU/e.”

Another challenge wasn’t so much the novelty of the supercomputer, but the fact that CT scans are three-dimensional. Models are typically trained on two-dimensional images, Claessens explains. “But those methods didn’t yet exist for 3D images. You can’t just feed 3D data into a 2D model—it doesn’t fit. You have to come up with clever solutions to prevent the computer’s memory from overloading. That can happen even with a supercomputer. We designed the model so that it first looks at small 3D blocks and then finds connections across the entire volume.”
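The first half of that idea, cutting a scan into small 3D blocks so that only a fraction of the volume has to be processed at once, can be sketched as follows. This shows plain tiling only, not the model’s actual architecture or how it links blocks across the volume. The scan dimensions, block size, and function names are hypothetical.

```python
from itertools import product


def block_origins(volume_shape, block_shape):
    """Return the (z, y, x) start index of every block that tiles the volume."""
    ranges = [range(0, dim, step) for dim, step in zip(volume_shape, block_shape)]
    return list(product(*ranges))


def block_extent(origin, volume_shape, block_shape):
    """Return (start, stop) per axis; blocks at the edge are clipped to the volume."""
    return [
        (start, min(start + size, dim))
        for start, size, dim in zip(origin, block_shape, volume_shape)
    ]


# Hypothetical scan: 128 slices of 256 x 256 voxels, cut into 64 x 64 x 64 blocks.
scan_shape = (128, 256, 256)
block = (64, 64, 64)
origins = block_origins(scan_shape, block)
print(len(origins), "blocks; last block spans", block_extent(origins[-1], scan_shape, block))
```

Each block here holds 64 × 64 × 64 voxels instead of the full 128 × 256 × 256, which is the kind of reduction that keeps memory use manageable.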

For humanity

And then there’s the matter of collecting 250,000 CT scans. “We use publicly available datasets,” says Van der Sommen. “Some come from challenges where researchers each develop a model using an open dataset.” Without all that public data, the foundation model wouldn’t have had enough material to learn from.

Now that the model exists, Van der Sommen and Nuijten want to give something back to the world. They are making the model fully open source — at least for researchers and clinicians — so it can be fine-tuned for specific applications. This way, the technology can reach hospitals more quickly, which is what motivates them.

“Hopefully, the model can also help people with rare forms of cancer,” says Van der Sommen. “For those cancers, there often isn’t enough data to train a model properly. With this foundation model, that becomes possible.” Nuijten adds, “That’s what makes me so happy about this work. You’re making the difference between ‘this will never work’ and ‘this is going to work.’”

This article was translated using AI-assisted tools and reviewed by an editor.
