Robots and people

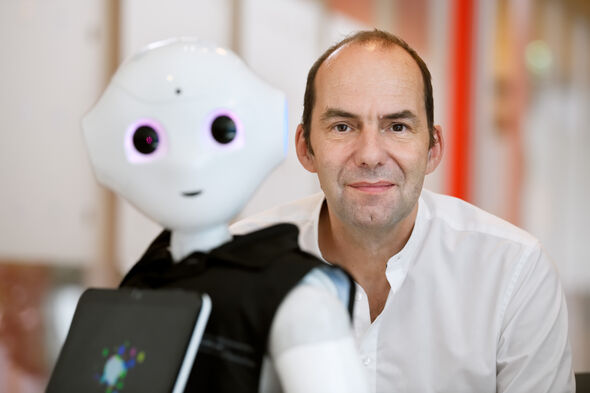

Who is liable for accidents involving self-driving cars? How should a robot behave towards children and people in need of care? To what extent should we allow smart algorithms to make political choices? Artificial intelligence (AI) is developing at a rapid pace, and more and more people will be affected by it in their daily lives. That is why the focus of attention within Eindhoven’s AI institute EAISI doesn’t lie exclusively with matters of technology. Cursor spoke to EAISI’s quartermaster Wijnand IJsselsteijn, professor in the philosophy of technology Vincent Müller, and young researchers of the Social Robotics Lab about the human and moral implications of artificial intelligence.

In his capacity as professor of Cognition and Affect in Human-Technology Interaction, Wijnand IJsselsteijn represents the ‘human-orientated’ pillar within TU/e’s new AI institute. This side of research is represented primarily by his own Human-Technology Interaction group, and by the Human Performance Management and Philosophy & Ethics groups (all of the department of IE&IS). Furthermore, much research conducted at the department of Industrial Design can be placed in this category, as well as several projects within the department of the Built Environment.

According to IJsselsteijn, the fact that both he and university professor Anthonie Meijers (until recently head of the Philosophy & Ethics group) take part in the new Gravitation program Ethics of Socially Disruptive Technologies is a nice combination of circumstances. “That offers us possibilities to give a bit more body to the ‘human-centered’ side within EAISI,” he says. “You shouldn’t just approach artificial intelligence as a technical problem; the socio-scientific and philosophical sides are very important as well. You see that in the way society reacts to new developments in AI. It’s the subject of a very lively debate.”

TU/e is investing heavily in artificial intelligence: last summer, it was announced that the newly established Eindhoven Artificial Intelligence Systems Institute (EAISI) will recruit fifty new researchers, on top of the hundred researchers already working in this field. The university intends to invest a total of about one hundred million euros, which includes turnover from the government’s sector funds and the costs of the renovation of the Laplace building, which is to become the permanent location for EAISI, currently located in the Gaslab.

Wijnand IJsselsteijn is one of EAISI’s quartermasters. The others are associate professor Cassio Polpo de Campos (Information Systems, Computer Science) and full professors Paul van den Hof (Control Systems, Electrical Engineering, and one of the initiators of TU/e’s High Tech Systems Center), Nathan van de Wouw (Dynamics & Control, Mechanical Engineering), and Panos Markopoulos (Design for Behavior Change, Industrial Design).

In general, IJsselsteijn sees that people are very wary of artificial intelligence. “Fortunately, we are still in time to discuss the ethical implications of AI – whereas we missed the boat somewhat with big data, where companies such as Facebook are now being scrutinized by politicians. But data will show its teeth with AI, which is why I believe that it’s essential to think about the implications.”

On the other hand, the professor believes that people tend to scream blue murder rather quickly nowadays when it comes to AI. “I don’t see machines taking over power just now, as some people predict. Someone like futurist Ray Kurzweil calculates when computers will have a computational power that exceeds that of humans, and claims that computers will become more intelligent than people when that moment arrives – that conclusion is far too easy. Intelligence is so much more than computational power. AI is much more limited. The idea that fully autonomous AI supposedly will develop a hunger for power seems like a rather special assumption to me. Being intelligent is not the same thing as wanting something. And we can equip AI with a system of values that excludes that possibility. We ourselves are in charge. I think of AI more as a supplement to our own intelligence in very specific areas, as a powerful cognitive tool.”

IJsselsteijn is also aware of moral consequences of not using artificial intelligence. He believes that the moral debate shouldn’t just focus on the dangers of technology such as AI, but on the advantages as well. “Think of self-driving cars. You could argue that there are risks involved because they don’t function optimally yet. They will probably never function perfectly. But you have to contrast that with the fact that according to current statistics, a million people die in traffic accidents each year – mostly as a result of human errors. If you can reduce that number with self-driving cars, I consider it a moral obligation to do so. Even when the focus will lie with the cases where the self-driving car is responsible for the accident. We just seem to be less forgiving of mistakes made by machines than of those made by people.”

The latter phenomenon is one of the things IJsselsteijn would like to further investigate within EAISI. “On the one hand, people perhaps expect too much from ‘AI agents,’ and aren’t very tolerant because of that; on the other hand, it’s possible to have too little faith in AI, which means people don’t make optimal use of the system. If you turn off the system because you don’t believe your car knows better when to make an emergency stop than you do, we’re not getting anywhere. That, and for example the interaction between self-driving cars and pedestrians, is something I would like to investigate with the colleagues of the Automotive Lab.”

IJsselsteijn is also intrigued by the question of how AI should function in a world where not everyone follows the rules that closely. “Take the traffic situation in a city like Amsterdam for example. Pedestrians and cyclists claim right of way there, even when they don’t have it. Eventually, self-driving cars have to be able to deal with that. In a way, this would mean that they need to be flexible, able to assess the intentions of other road users, and able to communicate their own intentions. This is in fact a social negotiation process.”

Other issues IJsselsteijn would like to work on within EAISI include intelligent ‘interfaces’ such as Google Home/Assistant and Amazon Echo/Alexa, which are rapidly gaining in popularity. “Alexa is a female voice by definition. Looking at it from a feminist perspective, you could wonder whether such a ‘household assistant’ should be a woman. And how do you manage the expectations people have of such a voice assistant? I once worked on a project with Philips with an intelligent agent in the shape of a small dog. That’s a very clever interaction metaphor, because people immediately realize that a dog doesn’t understand everything literally, only certain commands. And it’s not really a problem ethically speaking to boss around a dog – unlike a woman.”

In addition, IJsselsteijn is interested in possible implementations of AI in the medical sector. “Think of ‘personalized medicine,’ and for example how you encourage people to adopt a healthier lifestyle. There aren’t just technical aspects connected to that, but psychological and ethical ones as well. Are you allowed to try and influence people with intelligent systems? And what consequences does that have for freedom of choice? Industrial Design most certainly is a partner in that when you’re talking about ‘quantified self’ and human health and vitality, but so is Built Environment when you take into account environmental factors and how the built environment influences our behavioral choices.”

Social Robotics Lab

Most people will probably associate artificial intelligence with robots. Within the Social Robotics Lab, a joint initiative of the Human-Technology Interaction (IE&IS) and Future Everyday (Industrial Design) groups, researchers investigate how robots can be adapted to function in a human environment. This means, for example, that robots have to develop some kind of social intelligence – a ‘feeling’ for what people think is or is not appropriate. With an eye on the future development of completely autonomous robots, the researchers also investigate how to combine artificial and human intelligence in a smart way. Cursor spoke to PhD candidates Margot Neggers (HTI), Maggie Song and Tahir Abbas (both from the department of Industrial Design) about their projects.

Robots in your ‘personal space’

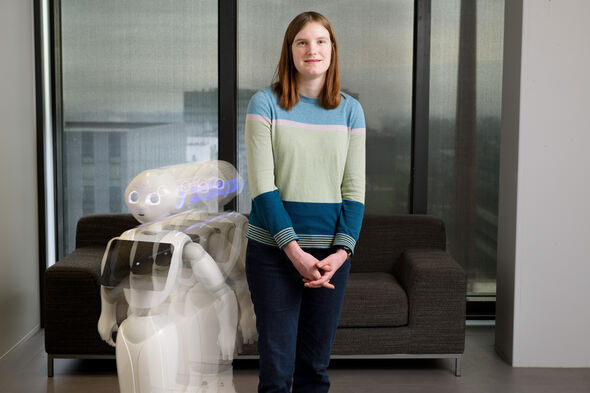

Margot Neggers is a PhD candidate in the Human-Technology Interaction group. Within the Social Robotics Lab, she works with two small NAO robots called Bender and Marvin, each 60 centimeters tall, and a Pepper robot, which is twice as large and goes by the name of Eve (after the robot from the computer-animated movie WALL-E).

Her modest lab in Atlas consists of a small corridor where test persons can sign up on the computer, a small ‘control room’ from which the researchers can observe the experiments, and the actual lab space with a desk with a computer on it, and a sofa with its back placed against a window that offers a splendid view of Eindhoven.

Bender and Marvin are lying lazily on the sofa while Eve stands motionless in the middle of the modest space with her head bowed. But that changes when Neggers ‘wakes her up’ with a single push of a button. Instantly, the added value of a ‘humanoid’ robot as ‘carrier’ of artificial intelligence becomes apparent: her large eyes that move around and seek contact the moment you say something, combined with her body movements make you feel that Eve is a person. Something halfway between a human and a pet, as it were. It’s not difficult to imagine that robots such as Eve can make the lives of elderly, dependent people more agreeable not only on a practical level, but on a social level as well.

Inside, robots like these are very similar to the by now common voice assistants of Google, Amazon, Microsoft, Apple and Samsung that come with smartphones, ‘smart speakers’ and refrigerators even, the PhD candidate admits. “That’s why you always need to ask yourself what added value the robot’s ‘body’ represents.” Robots like Eve are already used in public life in Japan in particular, Neggers says. “At train stations, they provide travelers with information about departure times, and they help people in stores find certain products.” The fact that they can move around on wheels is very convenient in a store of course, and they immediately catch the eye at a train station. And it’s also nice to communicate with a partner who looks you in the eye to show you that she’s paying attention.

Neggers investigates how robots should behave when they share a space with humans. To find out, she asked several test persons to indicate when they felt that Eve came uncomfortably close. “It turns out that the distance she had to maintain was in fact similar to the ‘personal space’ you usually keep from other people. People are least comfortable when the robot moves behind them.” The next step is to make robots take moving people into account, she says. “We will specifically look at situations where robots and humans cross paths. Should the robot move faster, slow down, stand completely still, or walk around humans? We would like to know what people prefer.”

Because ultimately, it’s very important that people will trust robots. “Otherwise, you can develop the most wonderful robots, but they’ll never be used.” The PhD student did notice already that the circumstances are of crucial importance for that trust. “The robot sometimes moved very close to the test persons during the experiments. Personally, I found that a bit scary, but the test persons weren’t put off. They indicated that they trusted the university and me as an expert. But when you’re outside and suddenly run into a robot you’ve never seen before, you don’t know whether it belongs to a trustworthy organization. That’s another interesting issue to look into.”

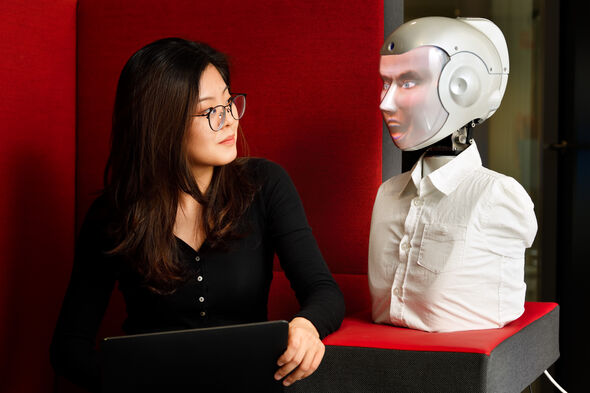

Encouraged by a robot

Maggie Song will also obtain her doctorate within the Social Robotics Lab, but at the department of Industrial Design. There, she conducts research into the requirements social robots have to meet if you want to use them with children. In one of her projects she investigated how these robots can be used to motivate children to do their best for music lessons. For this purpose, she uses a ‘SociBot,’ a robot designed by the company Engineered Arts with a very lively facial expression. That expression is projected onto the face from the inside, and this offers an endless range of possibilities to act out several different characters.

“Children often find music lessons fun and exciting in the beginning, but eventually it’s also a matter of spending many hours practicing,” the PhD candidate explains. The idea is that having a robot present during practice sessions to provide feedback can help motivate the children. “Parents prefer not to be overinvolved, because they don’t have the time, or because it’s bad for the relationship with their child.”

During experiments with children from Eindhoven between the ages of nine and twelve, Song tested two different roles for the SociBot - evaluative (with specific tips) and non-evaluative (as a supportive audience). “We wanted to know if children interpret these roles in the same way, and that turns out to be the case.”

The role of artificial intelligence, incidentally, is still very small at this stage, she admits. “We used the so-called ‘Wizard of Oz’ method - in which we sat in a separate room and made the robot give preprogrammed responses.” According to Song, it’s too early to say whether the robot’s encouragements have a positive effect on the children’s musical accomplishments. “We are still analyzing the results, together with a music teacher.”

The robot as gateway to crowd workers

Tahir Abbas from Pakistan is a third-year PhD candidate at Industrial Design. Within the Social Robotics Lab, he conducts research into the (im)possibilities of crowdsourcing for AI applications. Crowdsourcing relies on a flexible online workforce that is available 24/7 because the pool of workers is spread over different time zones, and the practice is advancing rapidly. One example is Amazon Mechanical Turk, where you can post so-called Human Intelligence Tasks, to which the available crowd workers can respond instantly.

In Abbas’ experiments, the user communicates with a social robot, whose answers are in fact provided by crowd workers. The robot initially functions simply as an interface, as a link between the ‘client’ and a pool of anonymous workers. The PhD candidate describes an experiment with an actress, who pretended to be a student suffering from stress and loneliness, and who wanted to talk about that with a life coach.

“The hired crowd workers could see and hear her with the robot’s camera, which allowed them to respond to her questions directly. The responses they typed were then read to the actress by the robot.” To accelerate the process, it’s also possible to post the question to several workers at once. Abbas and his colleagues tested this with 1, 2, 4 and 8 crowd workers and concluded that the responses came significantly faster with more workers, while the quality remained the same.

In time, this system, also referred to as ‘human-in-the-loop,’ can be equipped with actual artificial intelligence, Abbas explains. “We are now trying to make the robot, a Pepper, give short responses, purely to buy the active crowd workers more time. The next step could be to build in a self-learning algorithm that is able to independently give a correct answer to more and more questions.”

The ethics of artificial intelligence

Vincent Müller is an expert in the field of the theory and ethics of artificial intelligence. Since last May, the professor of Philosophy of Technology has been working in the group Philosophy & Ethics as successor to university professor Anthonie Meijers. The professor from Germany also holds positions at the University of Leeds and the Alan Turing Institute in London. His other positions include that of President of the European Society for Cognitive Systems.

The age of artificial intelligence has only just begun, Müller emphasizes. “Artificial intelligence will change our society to a greater degree than the rise of computers in the last three decades. When you compare it to the industrial revolution, we’re only in the phase of the first steam engines at this point. People referred to the first cars as ‘horseless carriages,’ just as we’re now talking about ‘driverless cars.’” What he means to say is that just as we no longer associate cars with horse carriages, future generations will not realize that cars once needed a driver.

However, it remains to be seen whether our current framework of norms and values will provide a good basis for our dealings with this kind of far-reaching artificial intelligence. “On the one hand, ethics is about weighing advantages and disadvantages, and costs and benefits, and on the other hand it’s about certain absolute values. For instance, we find it unethical to kill an innocent person so that a group of other persons can benefit from that, while you might make that choice based on a rational analysis of costs and benefits.”


Our ideas about those values grew historically, but perhaps they aren’t applicable to artificial intelligence, the philosopher explains. We want someone to be able to justify his choice, but what if that ‘someone’ is a smart machine? When he recently applied for a credit card online, his Dutch bank refused to honor his request, he says. A typical case of ‘computer says no.’

“I didn’t receive any explanation about why my application was rejected, while I most certainly would have demanded one if I had sat across from a bank employee. And according to the new European privacy regulation, I’m entitled to such an explanation.” The General Data Protection Regulation (GDPR) is meant to ensure that organizations can’t get away with the excuse that this is simply how ‘the system’ decided, he explains. “That is perhaps the greatest danger of artificial intelligence: that we shift responsibility to anonymous, untransparent systems.”

The requirement that AI must be ‘explainable’ is therefore crucial, Müller says. “Artificial intelligence will discriminate in the same way we do if we feed the system data from the past. The AI will think this is normal. We really need to be careful that our subconscious discrimination in particular won’t be copied by intelligent systems.”

In Müller’s argument, the consequences of artificial intelligence are closely linked to collecting personal data and the extent to which we do this in a responsible, privacy-respecting way. “Artificial intelligence will become more and more inextricably linked with the collection and processing of data. Take this conversation for example, which you’re recording. We can’t foresee what information might be extracted from it in the future with the use of AI. Perhaps it could be deduced from my way of speaking that I will develop Alzheimer’s in twenty years, for example. That’s not a fantasy: it appears that the very early stages of Parkinson’s disease can be recognized by the way someone walks.”

Besides the problem of AI as a ‘black box,’ there is the question of to what extent the role of people will change in a world filled with artificial intelligence that might be smarter than us. “Simply put, the fact that we’re in charge here on earth is because we are the smartest beings. That will change when machines become smarter than humans, and that’s already the case in certain areas. In a way, we lose control because of this, if only because we let computers make decisions we can no longer account for, because we don’t know exactly why the AI gave a certain piece of advice.”

What new rules should be introduced in a world with a dominant role for artificial intelligence? Müller believes it’s too early to say too much about that. “We still don’t understand enough about the impact of artificial intelligence. We will have to follow the developments closely, and continuously ask ourselves whether the current laws and regulations still suffice. In particular with regard to liability. Are the ‘passengers’ responsible for accidents with a self-driving car, or the manufacturer? I think the latter, but the law in Italy, for example, explicitly requires that there always needs to be a driver present in a car. That’s a good example of a law that needs to be adapted for autonomous vehicles.”

On the other hand, the GDPR is a major step in the right direction in the area of privacy in times of artificial intelligence, according to the philosopher, who himself is a member of the advisory committee of AI4EU, a European platform. The difference with the American or Chinese approach, for instance, is obvious. “AI4EU tries to position the European AI as ‘human centered AI,’ or ‘responsible AI.’ That’s why in Europe, we want to embed ethics in artificial intelligence and robotics from the start.”
